The AI Revolution: What Happens When We're Not the Smartest Anymore?

By Richard Sebaggala

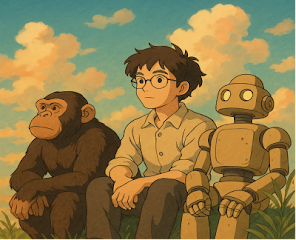

Not long ago, historian and bestselling author Yuval Noah Harari, known for his insightful books on the history and future of humanity, made a simple but unsettling comparison. He reminded us that chimpanzees are stronger than humans, yet we rule the planet—not because of strength but because of intelligence. That intelligence allowed us to organize, tell stories, cooperate in large groups, and build civilizations. Now, for the first time since we became the dominant species, something else has emerged with the potential to outmatch us—not physically, but cognitively.

Artificial Intelligence is not just another technology like the internet or the printing press. It is not simply an upgrade to how we compute, search, or automate. It is something more fundamental—a new kind of intelligence that doesn’t think like us, doesn’t learn like us, and doesn’t need to share our goals to reshape the world we live in. This isn’t merely about faster tools. It’s about a shift in cognitive authority, where machines are beginning to generate content, solve problems, and offer decisions that many humans now accept as credible—often without question.

In a previous article, I argued that AI is forcing us to rethink what intelligence really means. We are used to associating intelligence with human traits—consciousness, memory, creativity, even ethical reasoning. But AI doesn’t need any of these to function impressively. It uses statistical patterns and probability, not lived experience. It doesn’t need to “understand” to produce results that look meaningful. This has introduced a quiet but deep disruption: intelligence is no longer something uniquely human.

This realization calls for a different kind of response. We need to stop asking whether AI will “replace” humans and start asking how we, as humans, can live meaningfully and ethically in a world where we may no longer be the only—or even the dominant—form of intelligence.

To do that, we’ll need to rethink our policies. AI isn’t just another app to regulate; it’s a system capable of influencing elections and research, shaping public opinion, managing hospitals, and delivering education. We need strong, clear rules about what AI is allowed to do, and under what conditions. These rules must go beyond technical fixes and deal with deeper questions about accountability, fairness, and the role of humans in decision-making.

We also need to rethink education. If AI can access all the world’s information and summarize it instantly, what becomes the role of the classroom? What becomes the value of memorizing facts or writing essays? In this new world, human education must shift toward what AI still lacks: ethical thinking, emotional intelligence, creativity, empathy, and the ability to live with ambiguity.

Economically, things are shifting too. In the past, knowledge work—thinking, writing, analyzing—was a scarce and valuable resource. But now, the cost of generating “thinking” is falling. AI can produce text, summaries, even insights, at scale. This creates an economic twist. As thinking becomes abundant, what becomes rare is discernment—the ability to judge what matters, what’s true, and what’s worth acting on. Human judgment, not information, may be the next frontier of value.

But perhaps the biggest adjustment we need is psychological. For generations, we’ve grown up believing that humans are the smartest species on the planet. That belief shaped everything—from how we designed schools and workplaces to how we structured religion, law, and government. Now, as we encounter a form of intelligence that doesn’t look like us but can outperform us in many ways, we need to make space in our worldview for another mind. That takes humility, but also imagination.

We must remember that intelligence doesn’t have to be a “zero-sum” game. In economics, a zero-sum situation is one in which one party’s gain is exactly matched by another’s loss. But intelligence can grow without taking away from others. AI getting smarter doesn’t mean humans are getting dumber. In fact, if we use it wisely, AI can help us become more reflective, more creative, and more human. But that happens only if we remain active participants who question, interpret, and shape its use rather than passively consuming its outputs.

What happens next is not inevitable. It depends on the choices we make—politically, socially, and personally. We don’t need to compete with machines, but we do need to learn how to live with them. That means adapting, not surrendering. Guiding, not resisting. Coexisting, not collapsing.

If we manage to do that—if we protect what makes us uniquely human while embracing what machines can offer—we might discover that the rise of another kind of mind is not the end of human relevance. It could be the beginning of a deeper kind of human flourishing.
